The Aggregator Stage:

Aggregator stage is a processing stage in datastage is used to grouping and summary operations.By Default Aggregator stage will execute in parallel mode in parallel jobs.

Note:In a Parallel environment ,the way that we partition data before grouping and summary will affect the results.If you parition data using round-robin method and then records with same key values will distruute across different partiions and that will give in correct results.

Aggregation Method:

Aggregator stage has two different aggregation Methods.

1)Hash:Use hash mode for a relatively small number of groups; generally, fewer than about 1000 groups per megabyte of memory.

2)Sort: Sortmode requires the input data set to have been partition sorted with all of the grouping keys specified as hashing and sorting keys.Unlike the Hash Aggregator, the Sort Aggregator requires presorted data, but only maintains the calculations for the current group in memory.

Aggregation Data Type:

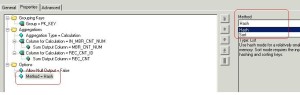

By default aggregator stage calculation output column is double data type and if you want decimal output then add following property as shown in below figure.

If you are using single key column for the grouping keys then there is no need to sort or hash partition the incoming data.

AGGREGATOR STAGE AND FILTER STAGE WITH EXAMPLE

If we have a data as below

table_a

dno,name

10,siva

10,ram

10,sam

20,tom

30,emy

20,tiny

40,remo

And we need to get the same multiple times records into the one target.

And single records not repeated with respected to dno need to come to one target.

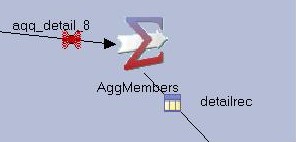

Take Job design as

Read and load the data in sequential file.

In Aggregator stage select group =dno

Aggregator type = count rows

Count output column =dno_cpunt( user defined )

In output Drag and Drop the columns required.Than click ok

In Filter Stage

----- At first where clause dno_count>1

-----Output link =0

-----At second where clause dno_count<=1 -----output link=0 Drag and drop the outputs to the two targets. Give Target file names and Compile and Run the JOb. You will get the required data to the Targets.

AGGREGATOR STAGE TO FIND NUMBER OF PEOPLE GROUP WISE

table_a

dno,name

10,siva

10,ram

10,sam

20,tom

30,emy

20,tiny

40,remo

And we need to get the same multiple times records into the one target.

And single records not repeated with respected to dno need to come to one target.

Take Job design as

Read and load the data in sequential file.

In Aggregator stage select group =dno

Aggregator type = count rows

Count output column =dno_cpunt( user defined )

In output Drag and Drop the columns required.Than click ok

In Filter Stage

----- At first where clause dno_count>1

-----Output link =0

-----At second where clause dno_count<=1 -----output link=0 Drag and drop the outputs to the two targets. Give Target file names and Compile and Run the JOb. You will get the required data to the Targets.

AGGREGATOR STAGE TO FIND NUMBER OF PEOPLE GROUP WISE

We can use Aggregator stage to find number of people each in each department.

For example, if we have the data as below

e_id,e_name,dept_no

1,sam,10

2,tom,20

3,pinky,10

4,lin,20

5,jim,10

6,emy,30

7,pom,10

8,jem,20

9,vin,30

10,den,20

Take Job Design as below

Seq.-------Agg.Stage--------Seq.File

Read and load the data in source file.

Go to Aggregator Stage and Select Group as Dept_No

and Aggregator type = Count Rows

Count Output Column = Count ( This is User Determined)

Click Ok ( Give File name at the target as your wish )

Compile and Run the Job

AGGREGATOR STAGE WITH REAL TIME SCENARIO EXAMPLE

For example, if we have the data as below

e_id,e_name,dept_no

1,sam,10

2,tom,20

3,pinky,10

4,lin,20

5,jim,10

6,emy,30

7,pom,10

8,jem,20

9,vin,30

10,den,20

Take Job Design as below

Seq.-------Agg.Stage--------Seq.File

Read and load the data in source file.

Go to Aggregator Stage and Select Group as Dept_No

and Aggregator type = Count Rows

Count Output Column = Count ( This is User Determined)

Click Ok ( Give File name at the target as your wish )

Compile and Run the Job

AGGREGATOR STAGE WITH REAL TIME SCENARIO EXAMPLE

Aggregator stage works on groups.

It is used for the calculations and counting.

It supports 1 Input and 1 Outout

Example for Aggregator stage

Input Table to Read

e_id, e_name, e_job,e_sal,deptno

100,sam,clerck,2000,10

200,tom,salesman,1200,20

300,lin,driver,1600,20

400,tim,manager,2500,10

500,zim,pa,2200,10

600,eli,clerck,2300,20

Here our requirement is to find the maximum salary from each dept. number.

According to this sample data, we have two departments.

Take Sequential File to read the data and take Aggregator for calculations.

And Take sequential file to load into the target.

That is we can take like this

Seq.File--------Aggregator-----------Seq.File

Read the data in Seq.Fie

And in Aggregator Stage ---In Properties---- Select Group =DeptNo

And Select e_sal in Column for calculations

i.e because to calculate maximum salary based on dept. Group.

Select output file name in second sequential file.

Now compile And run.

It will work fine.

It is used for the calculations and counting.

It supports 1 Input and 1 Outout

Example for Aggregator stage

Input Table to Read

e_id, e_name, e_job,e_sal,deptno

100,sam,clerck,2000,10

200,tom,salesman,1200,20

300,lin,driver,1600,20

400,tim,manager,2500,10

500,zim,pa,2200,10

600,eli,clerck,2300,20

Here our requirement is to find the maximum salary from each dept. number.

According to this sample data, we have two departments.

Take Sequential File to read the data and take Aggregator for calculations.

And Take sequential file to load into the target.

That is we can take like this

Seq.File--------Aggregator-----------Seq.File

Read the data in Seq.Fie

And in Aggregator Stage ---In Properties---- Select Group =DeptNo

And Select e_sal in Column for calculations

i.e because to calculate maximum salary based on dept. Group.

Select output file name in second sequential file.

Now compile And run.

It will work fine.

5 comments:

Hi,

I tried this one and have some questions.

If we have a data as below

table_a

dno,name

10,siva

10,ram

10,sam

20,tom

30,emy

20,tiny

40,remo

And we need to get the same multiple times records into the one target.

And single records not repeated with respected to dno need to come to one target.

My question:

I placed 2 seq files, one with count >1 and other with count <=1, 1 seq file output was this :

dno count

10 3

20 2

2 seq file output was like this:

dno count

40 1

30 1

Instead I wanted output like this:

dno name

10 siva

10 ram

10 sam

20 tom

20 tiny

2nd output file should be:

dno name

30 emy

40 remo

Can you pls help me derive this by using same agg + filter stages? I meant, based on the counts it should separate data as mentioned above.

Hi,

You can use copy and merge stage to get the following output.The stages are as:

Sequential file->copy->aggregator

copy->merge stage

then aggregator to merge stage

So merge stage will have two inputs one is from copy stage and one from aggregator

and then merge to filter stage and finally merge output to two sequential file

take Two jobs:- in job1 seq->Copy->Aggr->Filter->Seq

in copy stage take 2copies other is sequential file

build job2

Seq(1 copies_Out and reference is Filter_out)->LookUp->Seq(1 primary and 1 Reject o/p)

Hi Admin,

Aggregator stage is a stage which helped us to get the best solution in the fastest way. And this blog helped us a lot to get all solution.Thanks for sharing with us

Aggregator Stage

Post a Comment